(I wrote this in 2011 as a draft and then forgot about it. It still reads OK, so here it is, basically unchanged, and without the extra sections I’d probably originally planned to add.)

Even though big web sites use lots of so-called “enterprise” technology, and big companies and government departments create browser-based applications, there’s still a huge chasm between web developers and enterprise developers.

We’ve been flooded with clichés and stereotypes about both sides — enterprise developers do everything the hard way, web developers don’t understand security and reliability, etc. — but it’s best not to take that too seriously. A lot of my consulting work and personal projects straddle the line between Enterprise and Web, so I’ve had a decade and a half to observe people and processes on both sides.

I’ll post more about this later, but here are two differences that strike me right away:

- Enterprise does a lot of integration

- Enterprise doesn’t have many rock stars

Enterprise does a lot of integration

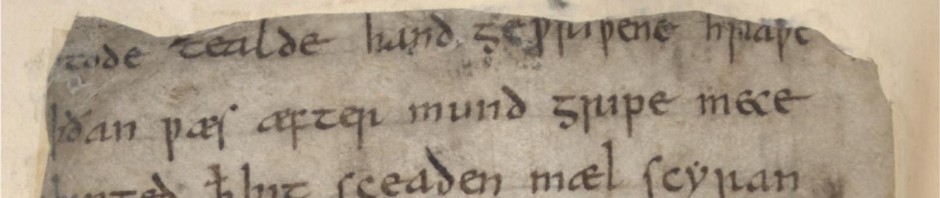

Enterprise IT projects are always about integration. We’re not talking about fun integration with a REST API on the web, but nasty, ugly integration with legacy systems as old as your parents, using custom data formats and unpronounceable character encodings out of the Mad Men era, like EBCDIC (if you’re lucky).

In web dev, whether you’re using SQL or a noSQL approach, you almost certainly own and manage your application’s data (unless you’re building one of those doomed Twitter or Facebook mashups). In an enterprise project, most of your data is coming from somewhere else (the 1970s mainframe at the Oakland data centre, the 1995 PowerBuilder app used by 350 analysts in Hong Kong, etc.). It comes veeeeery slooooowly, and it’s unreliable, and it’s almost guaranteed to be out of sync with the data you’re receiving from other sources (so forget about strict referential integrity). There’s nothing you can do about that, because huge parts of the enterprise are based around those legacy systems, and there’s no one left alive who knows how to change them anyway. Your whole $50M system might depend on data sent as a CSV email attachment every Tuesday night, and rejected 55% of the time because it’s malformed.

There’s a lot of snake oil out there that promises to “fix” this problem — ESB products, ETL products, WS-* products, etc. — but these all address the easy parts, near the middle, not the hard parts, at the edges (and sometimes they make even the middle more difficult than it needs to be).

The benefit of all this mess, though, is that an enterprise application designer is always thinking about distributed data, something that web developers talk about don’t always really get. It’s hard to imagine a CMS like Drupal or WordPress — that naively assumes it can keep all the information that it presents in its own (preferably MySQL) database — coming out of the enterprise.

Enterprise doesn’t have many rock stars

Really, it’s true. Developers working for government, or Fortune 500 companies, on average, aren’t very good. Of course, there must be some real talent hiding here and there, but on balance, coding for most enterprise employees (as opposed to outside contractors) is a 9–5 drudge job that they’re happy to leave at the end of the day. They’re nice people, but they’re not passionate about IT the way you and I are, and they’re not interested in becoming so.

This talent deficit has pretty serious implications for building projects in-house and for maintaining projects from any source — it means that enterprises micro-manage their developers in a way that a hotshot web developer would never tolerate. Part of that is just the overhead of working in a big team — even web companies do code reviews and write detailed requirements when they get big enough — but a lot of it is just a matter of not trusting developers to do the right thing on their own. There are huge numbers of tools out there to count, manage, audit, poke, prod, and otherwise abuse enterprise developers, and those tools are more widely-available in Java than in any other environment, hence the enterprise’s love of Java.

It’s hard to know where the fault is here: would good developers work for enterprise if the working conditions were better, or would they still run off to small startups or consulting for the variety and adrenaline rush? Would bad enterprise developers grow into average or even good ones if they were given more trust and autonomy? In any case, if you’re designing an application for enterprise, don’t expect things that seem trivially simple to you to seem simple to the developers.

The result of all this is that, even if you have a hotshot team of consultants and developers initially building an enterprise system, you have to design it so that mediocre technicians and developers can maintain it for the 10-30 years after you all leave. The enterprise has to be able to hire people with (generally useless) certifications as “Sharepoint specialist,” “Oracle DBA”, or whatever, and the system has to contain few surprises for them. Nothing cutting-edge, please, because they probably didn’t cover it in their certification courses.